MiRo

MiRo-E – Educational Robot

MiRo-E is a highly specified, flexible, low-cost, programmable AI robotic platform, developed to mimic familiar domestic animal-like behaviour.

Each MiRo-E includes 100 hours of access to MiRoCode, MiRo’s cloud-based programming software.

Product overview

After extensive research and the remarkable success of the developer version, MiRo-E is now available to schools!

- Works straight out of the box, exhibiting pet-like behaviour in pastoral/demonstration mode.

- Flexible enough to be used from primary school to university.

- This 3rd-generation MiRo has been completely re-engineered and adapted for education.

- Designed for learning robot programming, from first principles up to an advanced level.

- Comes with a specially designed, easy-to-learn programming app.

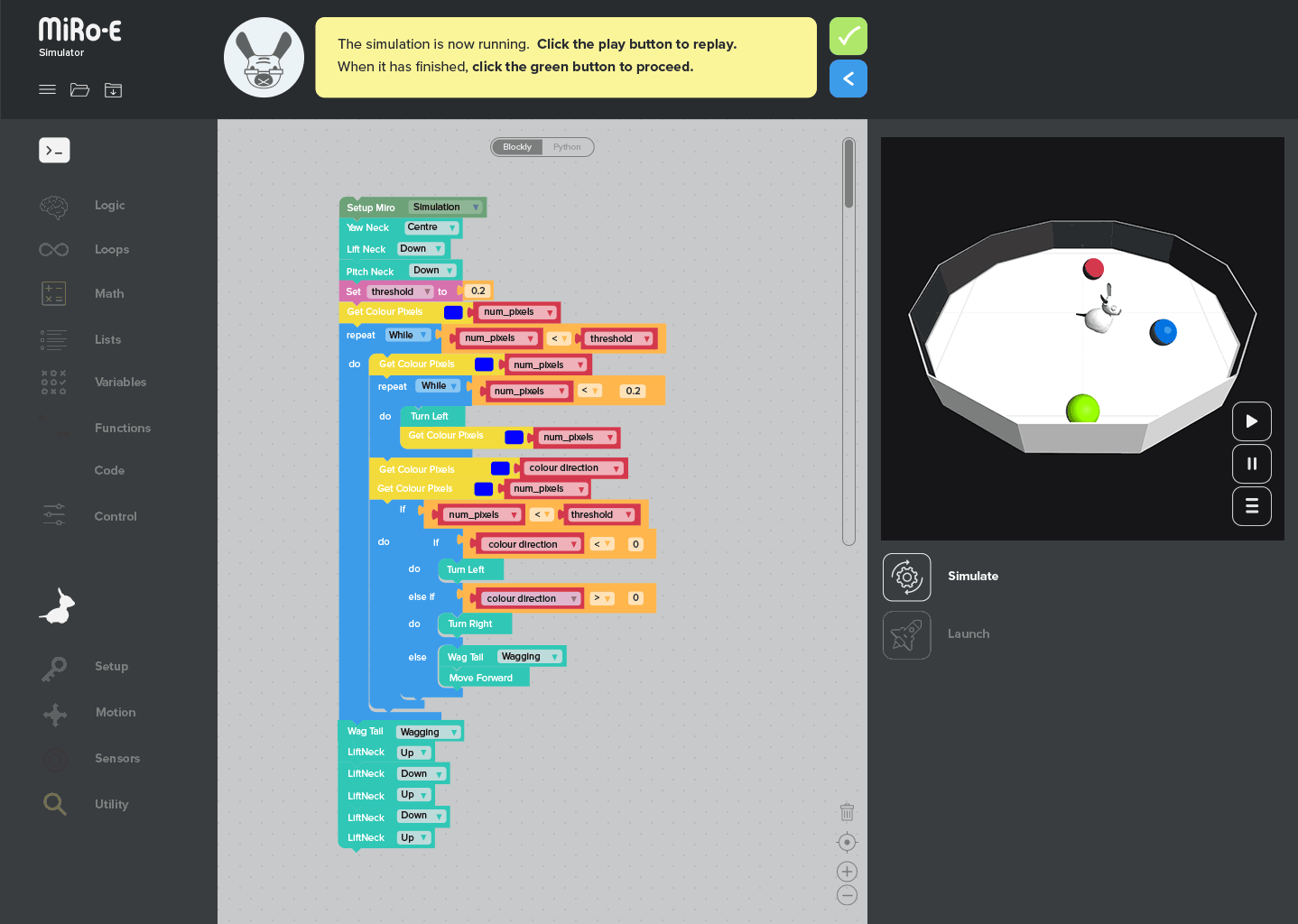

- Simulate MiRo’s behaviour on screen using the browser-based Gazebo app, so a whole class can work on programming with only one or two MiRo-E robots per classroom.

- An emotionally engaging personality: friendly and familiar.

- State-of-the-art biomimetic AI technology replicates the behaviour of a small mammal.

- Incorporates a powerful Raspberry Pi 3B+ on-board computer, preloaded with software.

- Fully programmable, serviceable and upgradeable by a user with moderate experience.

- Uses the unique Brain-Based Biomimetic Control System [3BCS], developed at the University of Sheffield and the Bristol Robotics Laboratory.

- Communicates using WiFi, Bluetooth and USB, which allows:

  - Bluetooth set-up and behaviour configuration using the MiRo-E smartphone app.

  - WiFi access to higher-power computing and cloud services.

- MiRo-E reacts to humans and to other MiRo-E robots using behaviour, sound and light. It is autonomous in demonstration/pastoral care mode and responds to touch, stroking and sound. It has proven to be a flexible and useful tool for sophisticated university research, and it could potentially help with therapy for neurological conditions [from SEN to dementia].

- MiRo-E arrives with the required software loaded.

- A 3D robot simulator (Gazebo) enables rapid development with just one MiRo-E per class.

- MiRo-E is proving useful as a pastoral, therapeutic and educational tool for:

  - Special Educational Needs (SEN) in children and adults.

  - Advanced university research groups that want to hack the robot or write their own custom programs.

  - Aged care, as a companion robot.

  - Early school learning, as a classroom pet [pastoral care mode].

  - Primary schools, using the simple-to-grasp MiRoSim interface.

  - Secondary schools, using the Blockly, C++ and Python programming languages.

  - Research into therapy for age-related issues.

- We suggest that students under 7 interact primarily with the physical MiRo-E (to explore simple STEM concepts in robotics, engineering and programming), and that students aged 7 and over start using the MiRo educational interface.

- Only one MiRo-E is needed per classroom, but because MiRo-E robots interact wonderfully with each other, two or three per class is ideal.

- The browser-based simulator interface means individual pupils can learn to code and see how the virtual MiRo-E will behave on screen before downloading their code to the physical robot.

- MiRo-E comes with a rechargeable battery pack and an inline charger.

- Battery life varies with usage, but is typically 6+ hours active and 12+ hours on standby.

- With the right know-how and programming, advanced users could adapt MiRo-E to perform a wide range of custom functions:

  - Stereo HD vision could be programmed for:

    - Facial recognition and analysis of mood and body language

    - Situational awareness

    - Optical navigation using objects and the ceiling as reference points

  - User-programmed applications for the quad microphones might include:

    - Acoustic mapping to work out where sounds are coming from

    - Responding to ambient noise by adapting behaviour

    - Obeying certain voice commands

    - Voice analysis to detect abnormal stress or excitement

  - WiFi/Bluetooth/USB connectivity could then give access to:

    - Higher-power computing for more demanding tasks such as SLAM navigation and advanced facial recognition

    - Operation in remote environments for security and surveying

    - Cloud services such as Alexa, Siri and Google

The only constraint is your skill and imagination!
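As an illustration of the acoustic-mapping idea above, the sketch below estimates the left/right bearing of a sound from the time difference of arrival (TDOA) between the two ear microphones, using a brute-force cross-correlation. It is a minimal sketch under stated assumptions: the 20 kHz sample rate comes from the specification below, but the ear-microphone spacing (`EAR_SPACING_M = 0.10`) is a hypothetical value, the function names are illustrative and not part of any MiRo-E software, and head geometry, reverberation and noise are ignored. A production version would more likely use an FFT-based method such as GCC-PHAT.

```python
import math
import random

SAMPLE_RATE_HZ = 20_000     # microphone sample rate, from the MiRo-E spec table
SPEED_OF_SOUND_M_S = 343.0  # speed of sound in air at about 20 °C
EAR_SPACING_M = 0.10        # HYPOTHETICAL spacing between the two ear microphones


def best_lag(left, right, max_lag):
    """Return the lag (in samples) that maximises the cross-correlation.

    A positive lag means left[n] ~ right[n - lag], i.e. the sound reached
    the right microphone first.
    """
    best, best_score = 0, float("-inf")
    n = min(len(left), len(right))
    for lag in range(-max_lag, max_lag + 1):
        score = sum(left[i] * right[i - lag] for i in range(n) if 0 <= i - lag < n)
        if score > best_score:
            best, best_score = lag, score
    return best


def bearing_deg(left, right):
    """Estimate the source bearing in degrees: 0 = straight ahead, positive = to the right."""
    # Largest physically possible delay between the two microphones, in samples.
    max_lag = math.ceil(EAR_SPACING_M / SPEED_OF_SOUND_M_S * SAMPLE_RATE_HZ)
    lag = best_lag(left, right, max_lag)
    tau = lag / SAMPLE_RATE_HZ                    # time difference of arrival, in seconds
    s = SPEED_OF_SOUND_M_S * tau / EAR_SPACING_M  # sin(bearing) under a far-field model
    return math.degrees(math.asin(max(-1.0, min(1.0, s))))


if __name__ == "__main__":
    # Synthetic test: white noise that reaches the left ear 3 samples after the right ear.
    rng = random.Random(0)
    right = [rng.uniform(-1.0, 1.0) for _ in range(400)]
    left = [0.0] * 3 + right[:-3]
    print(f"{bearing_deg(left, right):.1f} degrees")  # about 31 degrees, i.e. to the right
```

At 20 kHz one sample corresponds to about 17 mm of sound travel, so with microphones only a few centimetres apart the bearing resolution is coarse; that is the main reason practical systems interpolate around the correlation peak.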

Technical Specifications

Physical

Weight: 3.3 kg (2.9 kg without battery pack)

Wheel track: 164 mm

Wheel diameter: 90 mm

Maximum forward speed: 400 mm/s

Power: NiMH 4.8V 10Ah

Battery life: Varies with usage: typically 6+ hours active, 12+ hours standby
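The wheel track and wheel diameter above are enough to relate MiRo-E's body motion to its wheel speeds using standard differential-drive kinematics. The Python sketch below is illustrative only: the function names are hypothetical (not part of any MiRo-E API), and it assumes that the 400 mm/s maximum forward speed also acts as a per-wheel rim-speed limit.

```python
import math

WHEEL_TRACK_M = 0.164     # distance between the two main wheels (spec: 164 mm)
WHEEL_DIAMETER_M = 0.090  # spec: 90 mm
MAX_SPEED_M_S = 0.400     # spec: 400 mm/s; ASSUMED to also limit each wheel's rim speed


def wheel_speeds(v, omega):
    """Map body speed v (m/s) and turn rate omega (rad/s, positive = turn left)
    to (left, right) wheel rim speeds in m/s, scaled down to respect the speed limit."""
    left = v - omega * WHEEL_TRACK_M / 2
    right = v + omega * WHEEL_TRACK_M / 2
    peak = max(abs(left), abs(right))
    if peak > MAX_SPEED_M_S:
        # Scale both wheels by the same factor so the turning radius is preserved.
        scale = MAX_SPEED_M_S / peak
        left, right = left * scale, right * scale
    return left, right


def body_velocity(left, right):
    """Inverse mapping: (left, right) rim speeds in m/s -> (v in m/s, omega in rad/s)."""
    return (left + right) / 2, (right - left) / WHEEL_TRACK_M


def wheel_rpm(rim_speed):
    """Wheel rotation rate in revolutions per minute for a given rim speed in m/s."""
    return rim_speed / (math.pi * WHEEL_DIAMETER_M) * 60


if __name__ == "__main__":
    # Spinning on the spot at full wheel speed: omega = 2 * 0.4 / 0.164, about 4.9 rad/s.
    print(body_velocity(-MAX_SPEED_M_S, MAX_SPEED_M_S))
    # Driving straight at full speed needs roughly 85 rpm at each wheel.
    print(round(wheel_rpm(MAX_SPEED_M_S)))
```

Scaling both wheels together, rather than clipping each one independently, keeps the ratio of wheel speeds, and therefore the curvature of the path, unchanged when a command exceeds the speed limit.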

Sensors

4 × Microphones [1]: 16-bit @ 20kHz

2 × Cameras [2]: 1280×720 @ 15fps, 640×360 @ 25fps, 320×240 @ 35fps

1 × Sonar [3]: Proximity sensor in nose (3 cm up to 1 m)

28 × Touch: 14 in body, 14 in head; capacitive

4 × Light: Spread around body skirt

2 × Cliff [4]: Front edge of body skirt

2 × Motion: 1 optical sensor in each wheel (plus back-EMF sensing)

3 × Position: 1 position sensor in each body joint

2 × Accelerometer: 1 in body, 1 in head

1 × Voltage

1 × Battery voltage

[1] Two primary microphones at the base of the ears are supplemented by a noise-rejection microphone inside the head and an additional external microphone in the tail.

[2] Other frame sizes and aspect ratios are available; frame rate can be adjusted freely between 1.0 fps and the listed maximum.

[3] Sonar reflections are more reliable at shorter ranges; the sensor will report good reflectors at up to 1 metre.

[4] Cliff sensors can be fooled by varying lighting conditions, by the presence of unrelated objects, and by backwards motion; users should not assume they will be sufficient to prevent the robot driving off edges.
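The listed camera modes and footnote [2] imply a simple rule for choosing a frame rate: anything from 1.0 fps up to the listed maximum for that frame size. The sketch below encodes the three listed modes, clamps a requested rate to that range, and estimates raw (uncompressed) sensor data rates. It is illustrative only: the function names are hypothetical, the other frame sizes mentioned in footnote [2] are not modelled, and 3 bytes per pixel (uncompressed RGB) is an assumption, since the specification does not say how frames are encoded. The audio figure uses only values from the table (4 microphones, 16-bit, 20 kHz).

```python
# Maximum frame rate for each listed camera frame size (spec table, "Cameras").
CAMERA_MAX_FPS = {
    (1280, 720): 15.0,
    (640, 360): 25.0,
    (320, 240): 35.0,
}
MIN_FPS = 1.0  # footnote [2]: frame rate is adjustable from 1.0 fps to the listed maximum


def clamp_fps(width, height, requested_fps):
    """Clamp a requested frame rate to the allowed range for a listed frame size."""
    if (width, height) not in CAMERA_MAX_FPS:
        raise ValueError(f"{width}x{height} is not one of the listed modes")
    return min(max(requested_fps, MIN_FPS), CAMERA_MAX_FPS[(width, height)])


def raw_video_bytes_per_s(width, height, fps, cameras=2, bytes_per_pixel=3):
    """Uncompressed video data rate; bytes_per_pixel=3 (RGB) is an ASSUMPTION."""
    return width * height * bytes_per_pixel * fps * cameras


def raw_audio_bytes_per_s(channels=4, bits_per_sample=16, sample_rate_hz=20_000):
    """Raw microphone data rate using the spec values: 4 channels, 16-bit, 20 kHz."""
    return channels * (bits_per_sample // 8) * sample_rate_hz


if __name__ == "__main__":
    fps = clamp_fps(1280, 720, 30)               # capped at the 15 fps maximum
    print(fps)                                   # 15.0
    print(raw_video_bytes_per_s(1280, 720, fps)) # 82944000.0 bytes/s for both cameras
    print(raw_audio_bytes_per_s())               # 160000 bytes/s
```

Comparing modes this way makes the trade-off in the table concrete: 320×240 at 35 fps carries roughly one fifth of the pixels per second of 1280×720 at 15 fps.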

Actuators

2 × Main wheels: Differential drive

3 × Body joints: Lift, yaw, and pitch

Cosmetic

2 × Tail (wag/droop): Wagging (side-to-side) and droop (up-and-down) motions

2 × Ears (rotate): Left and right ears rotate independently

2 × Eyelids (open/close): Two eyelids open and close independently

Supplementary

6 × Illumination: RGB illumination LEDs shine through the body shell, three on each flank

Sound output: Streaming audio digitised at 8kHz
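Because sound output is digitised at 8 kHz, the highest frequency it can represent is the Nyquist limit of 4 kHz. The sketch below generates a pure tone at that sample rate and rejects frequencies at or above the limit. The sample format (16-bit signed mono PCM) and the `tone` helper are assumptions for illustration; the specification does not say what format MiRo-E's audio stream expects.

```python
import math
from array import array

OUTPUT_RATE_HZ = 8_000           # MiRo-E sound output rate, from the spec
NYQUIST_HZ = OUTPUT_RATE_HZ / 2  # highest representable frequency: 4 kHz


def tone(freq_hz, duration_s, amplitude=0.5):
    """Return a sine tone as 16-bit signed PCM samples (ASSUMED format) at 8 kHz."""
    if not 0 < freq_hz < NYQUIST_HZ:
        raise ValueError(f"frequency must be between 0 and {NYQUIST_HZ:.0f} Hz")
    if not 0.0 <= amplitude <= 1.0:
        raise ValueError("amplitude must be between 0.0 and 1.0")
    peak = int(amplitude * 32767)  # full scale for signed 16-bit samples
    n = round(duration_s * OUTPUT_RATE_HZ)
    return array("h", (round(peak * math.sin(2 * math.pi * freq_hz * i / OUTPUT_RATE_HZ))
                       for i in range(n)))


if __name__ == "__main__":
    samples = tone(440, 0.5)  # half a second of concert A
    print(len(samples))       # 4000 samples
```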

Processing

Embedded Stack

P1: 3 × STM32F030

ARM Cortex-M0 @ 24MHz

8kB SRAM

64kB FLASH ROM

P2: 1 × STM32H743

ARM Cortex-M7 @ 400MHz

1MB SRAM

2MB FLASH ROM

On-board Computer

P3: 1 × Raspberry Pi 3B+

ARM Cortex-A53 Quad Core @ 1.4GHz

1GB LPDDR2 RAM

16GB uSD FLASH ROM

Bluetooth, WiFi, USB expansion ports